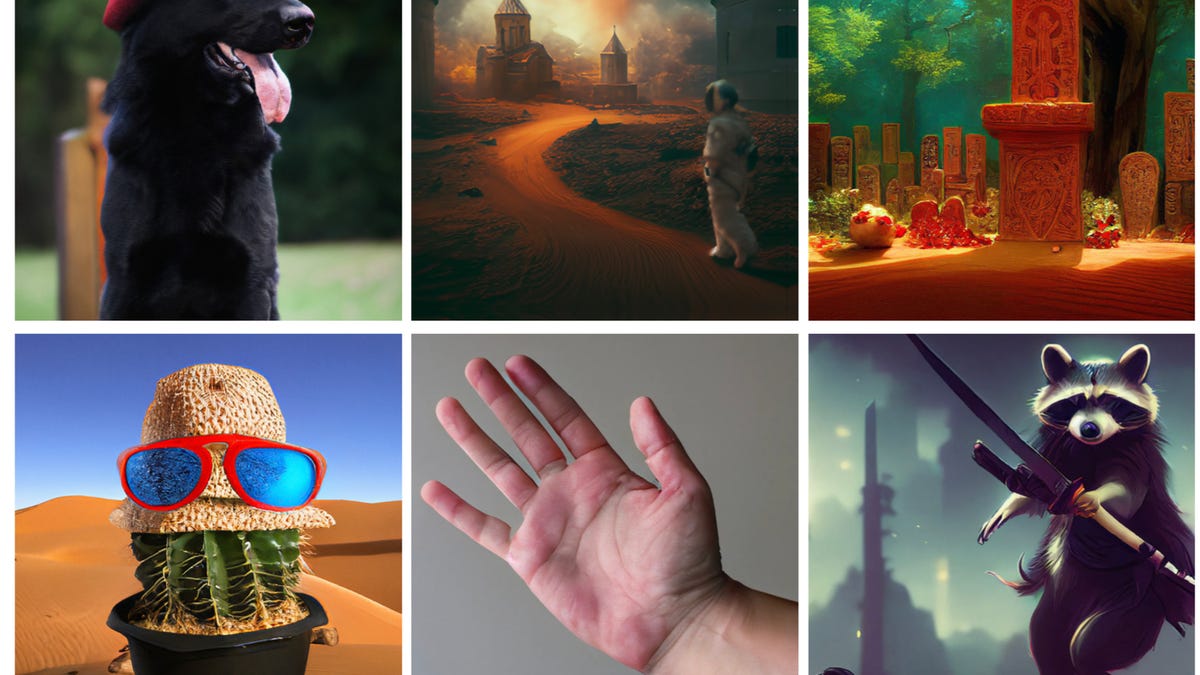

Utilizing a fraction of the GPU compute, Meta’s CM3Leon achieves photographs with complicated mixtures of objects, and hard-to-render issues like fingers and textual content, and at a degree that achieves a brand new state-of-the-art on the benchmark FID rating. Meta 2023

For the previous a number of years, the world has been wowed by synthetic intelligence packages that generate photographs while you kind a phrase, packages akin to Secure Diffusion and DALL*E that can output photographs in any model you need and that may be subtly diverse by utilizing completely different prompted phrases.

Usually, these packages have relied on manipulating instance photographs by performing a technique of compression on the instance photographs, after which de-compressing them to get better the unique, whereby they study the foundations of picture creation, a course of known as diffusion.

Additionally: Generative AI: Simply do not name it an ‘artist’ say students in Science journal

Work by Meta launched this previous week suggests one thing far easier: a picture will be handled as merely a set of codes like phrases, and will be dealt with a lot the way in which ChatGPT manipulates strains of textual content.

It is likely to be the case that language is all you want in AI.

The result’s a program that may deal with complicated topics with a number of components (“A teddy bear sporting a motorbike helmet and cape is using a motorbike in Rio de Janeiro with Dois Irmãos within the background.”) It may possibly render tough objects akin to fingers and textual content, stuff that tends to finish up distorted in lots of image-generation packages. It may possibly carry out different duties, like describing intimately a given picture, or altering a given picture with precision. And it may be finished with a fraction of the computing energy normally wanted.

Within the paper “Scaling Autoregressive Multi-Modal Fashions: Pre-training and Instruction Tuning,” by Lilu Yu and colleagues at Fb AI Analysis (FAIR), posted on Meta’s AI analysis website, the important thing perception is to make use of photographs as in the event that they have been phrases. Or, somewhat, textual content and picture perform collectively as steady sentences utilizing a “codebook” to interchange the pictures with tokens.

“Our method extends the scope of autoregressive fashions, demonstrating their potential to compete with and exceed diffusion fashions when it comes to cost-effectiveness and efficiency,” write Yu and staff.

Additionally: This new expertise might blow away GPT-4 and all the pieces prefer it

The concept of a codebook goes again to work from 2021 by Patrick Esser and colleagues at Heidelberg College. They tailored a long-standing type of neural community, referred to as a convolutional neural community (or CNN), which is skilled at dealing with picture recordsdata. By coaching an AI program referred to as a generative adversarial community, or GAN, which may fabricate photographs, the CNN was made to affiliate features of a picture, akin to edges, with entries in a codebook.”

These indices can then be predicted the way in which phrases in a language mannequin akin to ChatGPT predicts the subsequent phrase. Excessive-resolution photographs grow to be sequences of index predictions somewhat than pixel prediction, which is a far much less compute-intense operation.

CM3Leon’s enter is a string of tokens, the place photographs are lowered to simply one other token in textual content type, a reference to a codebook entry. Meta 2023

Utilizing the codebook method, Meta’s Yu and colleagues assembled what’s referred to as CM3Leon, pronounced “chameleon,” a neural internet that may be a giant language mannequin in a position to deal with a picture codebook.

CM3Leon builds on a previous program that was launched final yr by FAIR — CM3, for “Causally-Masked Multimodal Modeling.” It is like ChatGPT in that it’s a “Transformer”-style program, skilled to foretell the subsequent factor in a sequence — a “decoder-only transformer structure” — nevertheless it combines that with “masking” elements of what is typed, just like Google’s BERT program, in order that it may well additionally achieve context from what would possibly come later in a sentence.

CM3Leon builds on CM3 by including to it what’s referred to as retrieval. Retrieval, which is changing into more and more vital in giant language fashions, means this system can “telephone residence,” if you’ll, to succeed in right into a database of paperwork and retrieve what could also be related because the output of this system. It is a approach to have entry to reminiscence in order that the neural internet’s weights, or parameters, do not should bear the burden of carrying all the knowledge essential to make predictions.

Additionally: Microsoft, TikTok give generative AI a kind of reminiscence

In line with Yu and staff, their database is a vector “knowledge financial institution” that may be looked for each picture and textual content paperwork: “We break up the multi-modal doc right into a textual content half and a picture half, encode them individually utilizing off-the-shelf frozen CLIP textual content and picture encoders, after which common the 2 as a vector illustration of the doc.”

In a novel twist, the researchers use because the coaching dataset not web photographs however a group of seven million licensed photographs from Shutterstock, the inventory images firm. “In consequence, we are able to keep away from considerations associated to picture possession and attribution, with out sacrificing efficiency.”

The Shutterstock photographs retrieved from the database are used within the pre-training stage of CM3Leon to develop the capabilities of this system. It is the identical method ChatGPT and different giant language fashions are pre-trained. However, an additional stage then takes place whereby the enter and output of the pre-trained CM3Leon are each fed again into the mannequin to additional refine it, an method referred to as “supervised fine-tuning,” or SFT.

Additionally: The most effective AI artwork turbines: DALL-E 2 and different enjoyable alternate options to strive

The results of all this can be a program that achieves the state-of-the-art for a wide range of text-image duties. Their major check is Microsoft COCO Captions, a dataset revealed in 2015 by Xinlie Chen of Carnegie Mellon College and colleagues. A program is judged by how nicely it replicates photographs within the dataset, based on what’s referred to as an FID rating, a resemblance measure that was launched in 2018 by Martin Heusel and colleagues at Johannes Kepler College Linz in Austria.

Write Yu and staff: “The CM3Leon-7B mannequin units a brand new state-of-the-art FID rating of 4.88, whereas solely utilizing a fraction of the coaching knowledge and compute of different fashions akin to PARTI.” The “7B” half refers back to the CM3Leon program having 7 billion neural parameters, a typical measure of the size of this system.

A desk exhibits how the CM3Leon mannequin will get a greater FID rating (decrease is best) with far much less coaching knowledge, and with fewer parameters than different fashions, which is similar as saying much less compute depth:

One chart exhibits how the CM3Leon reaches that superior FID rating utilizing fewer coaching hours on Nvidia A100 GPUs:

What is the huge image? CM3Leon, utilizing a single prompted phrase, cannot solely generate photographs however can even establish objects in a given picture, or generate captions from a given picture, or do any variety of different issues juggling textual content and picture. It is clear that the wildly standard apply of typing stuff right into a immediate is changing into a brand new paradigm. The identical gesture of typing will be broadly employed for a lot of duties with numerous “modalities,” which means, completely different sorts of information — picture, sound, audio, and so forth.

Additionally: This new AI device transforms your doodles into high-quality photographs

Because the authors conclude, “Our outcomes help the worth of autoregressive fashions for a broad vary of textual content and picture duties, encouraging additional exploration for this method.”

Unleash the Energy of AI with ChatGPT. Our weblog supplies in-depth protection of ChatGPT AI expertise, together with newest developments and sensible functions.

Go to our web site at https://chatgptoai.com/ to study extra.