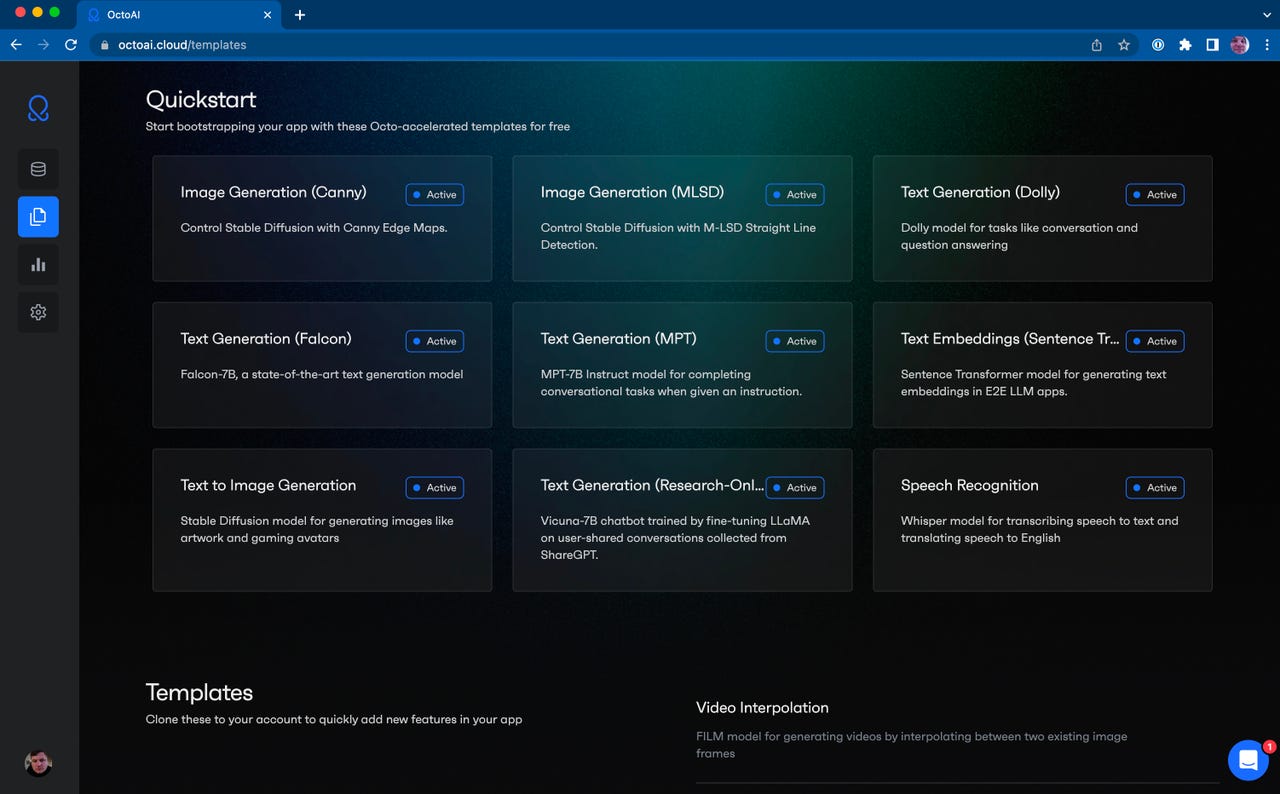

The OctoAI compute service offers templates for using familiar generative AI programs such as Stable Diffusion 2.1, but customers can also upload their own completely custom models. OctoML

The fervent interest in running artificial intelligence programs, especially of the generative variety, such as OpenAI’s ChatGPT, is producing a cottage industry of services that can streamline the work of putting AI models into production.

On Wednesday, startup OctoML, which cut its teeth improving AI performance across diverse computer chips and systems, unveiled a service called OctoAI Compute Service to smooth the work of serving up predictions, the “inference” portion of AI. Developers who want to run large language models and the like can upload their model to the service, and OctoAI takes care of the rest.

That could, says the company, bring many more parties into AI serving, not just traditional AI engineers.

“The audience for our product is general application developers that want to add AI functionality to their applications, and add large language models for question answering and such, without having to actually put those models in production, which is still very, very hard,” said OctoML co-founder and CEO Luis Ceze in an interview with ZDNET.

Generative AI, said Ceze, is even harder to serve up than some other forms of AI.

“These models are large, they use a lot of compute, it requires a lot of infrastructure work that we don’t think developers should have to worry about,” he said.

OctoAI, said Ceze, is “basically a platform that typically would have been built by someone using a proprietary model” such as OpenAI for ChatGPT. “We have built a platform that is equivalent, but that lets you run open-source models.

“We want people to focus on building their apps, not building their infrastructure, to let people be more and more creative.”

The service, which is now available for general access, has been in limited access with some early customers for about a month, said Ceze, “and the reception has exceeded everyone’s expectations by a very wide margin.”

Although the focus of OctoAI is inference, the program can perform a limited amount of what is known as “training,” the initial part of AI where a neural net is developed by being given parameters for a goal to optimize.

“We can do fine-tuning, but not training from scratch,” said Ceze. Fine-tuning refers to follow-on work that happens after initial training but before inference, to adjust a neural network program to the particularities of a domain, for example.

“We want people to focus on building their apps not building their infrastructure, to let people be more and more creative,” says OctoML co-founder and CEO Luis Ceze. OctoML

That emphasis has been a “deliberate choice” of OctoML’s, said Ceze, given that inference is the more frequent task in AI.

“Our work has been focused on inferencing because I’ve always had a strong conviction that for any successful model, you’re going to do a lot more inferencing than training, and this platform is really about putting models into production, and in production, and for the lifetime of models, the majority of the cycles go to inference.”

OctoML’s team is well-versed in the intricacies of putting neural networks into production given their central role in developing the open-source project Apache TVM. Apache TVM is a software compiler that operates differently from other compilers. Instead of turning a program into typical chip instructions for a CPU or GPU, it studies the “graph” of compute operations in a neural net and figures out how best to map those operations to hardware based on dependencies between the operations.
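To make the idea of a dependency graph concrete, here is a minimal sketch in plain Python. It is not Apache TVM's API or algorithm: the toy graph, the `HEAVY_OPS` set, and the `schedule` function are all hypothetical names chosen for illustration. It only shows how operations can be ordered so that each runs after the operations it depends on, and then assigned to a device by a simple rule.

```python
from graphlib import TopologicalSorter

# Hypothetical toy graph: each operation maps to the set of
# operations whose outputs it needs before it can run.
graph = {
    "conv": {"input"},
    "bias_add": {"conv"},
    "relu": {"bias_add"},
    "pool": {"relu"},
    "dense": {"pool"},
}

# Hypothetical placement rule (an assumption, not TVM's logic):
# send compute-heavy operations to the GPU, everything else to the CPU.
HEAVY_OPS = {"conv", "dense"}


def schedule(deps):
    """Return (operation, device) pairs in a dependency-respecting order."""
    order = list(TopologicalSorter(deps).static_order())
    return [(op, "gpu" if op in HEAVY_OPS else "cpu") for op in order]


plan = schedule(graph)
for op, device in plan:
    print(f"{op:>8} -> {device}")
```

A real compiler weighs far more than this, such as fusing adjacent operations and tuning for specific chips, but the ordering step above is the part that follows directly from "dependencies between the operations."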

The OctoML technology that came out of Apache TVM, called the Octomizer, is “like a component of an MLOps, or DevOps flow,” said Ceze.

The company “took everything we’ve learned” from Apache TVM and from building the Octomizer, said Ceze, “and produced a whole new product, OctoAI, that basically wraps all of these optimization services, and then full serving, and fine-tuning, a platform that enables users to take AI into production and even run it for them.”

The service “automatically accelerates the model,” chooses the hardware, and “continually moves to what’s the best way to run your model, in a very, very turnkey way.”

Using curl on the command line is a simple way to get started with templated models. OctoML

The result for customers, said Ceze, is that the customer no longer needs to be explicitly an ML engineer; they can be a generalist software developer.

The service lets customers either bring their own model, or draw upon “a set of curated, high-value models,” including Stable Diffusion 2.1, Dolly 2, LLaMA, and Whisper for audio transcription.

“As soon as you log in, as a new user, you can play with the models right away, you can clone them and get started very easily.” The company can help fine-tune such open-source programs to a customer’s needs, “or you can come in with your fully-custom model.”

So far, the company sees “a very even mix” between open-source models and customers’ own custom models.

The initial launch partner for infrastructure for OctoAI is Amazon’s AWS, which OctoML is reselling, but the company is also working with some customers who want to use capacity they’re already procuring from public cloud.

Uploading a fully-custom model is another option. OctoML

For the sensitive matter of how to upload customer data to the cloud for, say, fine-tuning, “We started from the get-go with SOC2 compliance, so we have very strict guarantees of mixing user data with other users’ data,” said Ceze, referring to the SOC2 voluntary compliance standard developed by the American Institute of CPAs. “We have all that, but we have customers asking for more now for private deployments, where we actually carve out instances of the system and run it in their own VPC [virtual private cloud].”

“That is not being launched today, but it’s in the very, very short-term roadmap because customers are asking for it.”

OctoML is going up against parties large and small who also sense the tremendous opportunity in the surge of interest, since ChatGPT, in serving up predictions with AI programs.

MosaicML, another young startup with deep technical expertise, in May introduced MosaicML Inference for deploying large models. And Google Cloud last month unveiled G2, which it says is “purpose-built for large inference AI workloads like generative AI.”

Ceze said there are a number of things to differentiate OctoAI from competitors. “One of the things that we offer that is unique is that we are focused on fine-tuning and inference,” rather than “a product that has inference merely as a component.” OctoAI, he said, also distinguishes itself by being “purpose-built for developers to very quickly build applications” rather than requiring them to be AI engineers.

“Building an inference focus is really hard,” observed Ceze, “you have very strict requirements” including “very strict uptime requirements.” When running a training session, “if you have faults you can recover, but in inference, the one request you miss could make a user upset.”

“I can imagine people training the models with MosaicML and then bringing them to OctoML to serve up,” added Ceze.

OctoML is not yet publishing pricing, he said. The service initially will allow prospective customers to use it for free, from the sign-up page, with an initial allotment of free compute time, and “then pricing is going to be very transparent in terms of here is what it costs for you to use compute, and here’s how much performance we are giving you, so that it becomes very, very clear, not just the compute time, and you’re getting more work out of the compute that you’re paying for.” That will be priced, ultimately, on a consumption basis, he said, where customers pay only for what they use.

There is a distinct possibility that a lot of AI model serving could move from being an exotic, premium service at present to being a commodity.

“I actually think that AI functionality is likely to be commoditized,” said Ceze. “It will be exciting for users, just like having spell checking or grammar checking, just imagine every text interface having generative AI.”

At the same time, “the larger models will still be able to do some things the commodity models can’t,” he said.

Scale means that the complexity of inference will be a challenging problem to solve for some time, he said. Large language models of 70 billion or more parameters generate 100 “giga-ops,” 100 billion operations per second, for every word that they generate, noted Ceze.
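As a rough sanity check on that order of magnitude, the sketch below applies a common rule of thumb that is not from the article: a forward pass costs about two operations (one multiply, one add) per parameter for each generated token. The function name and the factor of two are assumptions for illustration, and the estimate ignores attention over long contexts and other overheads.

```python
def forward_ops_per_token(num_params: int) -> int:
    """Approximate operations needed to generate one token.

    Assumes the rule of thumb of ~2 operations per parameter
    (one multiply and one add); real workloads vary.
    """
    return 2 * num_params


params = 70_000_000_000  # a 70-billion-parameter model
giga_ops = forward_ops_per_token(params) / 1e9

print(f"~{giga_ops:.0f} giga-ops per generated token")
```

Under that assumption a 70-billion-parameter model lands at roughly 140 giga-ops per token, the same order of magnitude as the figure Ceze cites.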

“That is many orders of magnitude beyond any workload that’s ever run,” he added. “And it requires a lot of work to make a platform scale to a global level.

“And so I think it’s going to bring business to a lot of folks, even if it’s commoditized, because we are going to have to squeeze performance and compute capabilities from everything we have in the cloud and GPUs and CPUs and stuff on the edge.”
