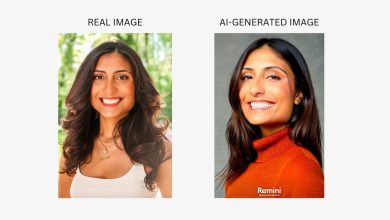

Naveen Rao (left), MosaicML co-founder and CEO, and Hanlin Tang, co-founder and CTO. The company’s training technologies are being applied to “building experts,” using large language models more efficiently to handle corporate data. Image: MosaicML

On Monday, Databricks, a ten-year-old software maker based in San Francisco, announced it would acquire MosaicML, a three-year-old San Francisco-based startup focused on taking AI beyond the lab, for $1.3 billion.

The deal is a sign not only of the fervor for assets in the white-hot generative artificial intelligence market, but also of the changing nature of the modern cloud database market.

MosaicML, staffed with semiconductor veterans, has built a program called Composer that makes it easy and affordable to take any standard version of an AI program, such as OpenAI’s GPT, and dramatically speed up the initial phase of its development, known as the training of a neural network.

This year, the company launched cloud-based commercial services where businesses can, for a fee, both train a neural network and perform inference, the rendering of predictions in response to user queries.

However, the more profound aspect of MosaicML’s approach implies that whole areas of working with data, such as the traditional relational database, could be completely reinvented.

“Neural network models can actually be thought of almost as a database of sorts, especially when we’re talking about generative models,” Naveen Rao, co-founder and CEO of MosaicML, told ZDNET in an interview prior to the deal.

“At a very high level, what a database is, is a set of endpoints that are typically very structured, so typically rows and columns of some sort of data, and then, based upon that data, there’s a schema on which you organize it,” explained Rao.

In a traditional relational database, such as Oracle, or a document database, such as MongoDB, the schema is preordained, said Rao. With a large language model, by contrast, “the schema is discovered from [the data], it produces a latent representation based upon the data, it’s flexible.” The query is also flexible, unlike the fixed lookups of a query language such as SQL, which dominates traditional databases.

“So, basically,” added Rao, “you took the database, loosened up the constraints on its inputs, schema, and its outputs, but it is a database.” In the form of a large language model, such a database can, moreover, handle large blobs of data that have eluded traditional structured data stores.

“I can ingest a whole bunch of books by an author, and I can query ideas and relationships within those books, which is something you can’t do with just text,” said Rao.

With clever prompting, the prompt context of an LLM provides flexible ways to query the database. “When you prompt it the right way, you can get it to produce something because of the context created by the prompt,” explained Rao. “And, so, you can query aspects of the original data from that, which is a pretty big concept that can apply to many things, and I think that’s actually why these technologies are very important.”

The MosaicML work is part of a broad movement to make so-called generative AI programs like ChatGPT more relevant for practical business purposes.

For example, Snorkel, a three-year-old AI startup based in San Francisco, offers tools that let companies write functions that automatically create labeled training data for so-called foundation models, the largest neural nets that exist, such as OpenAI’s GPT-4.

And another startup, OctoML, last week unveiled a service to smooth the work of serving up inference.

The acquisition by Databricks brings MosaicML into a vibrant non-relational database market that has for several years been shifting the paradigm of a data store beyond rows and columns.

That includes the data lake of Hadoop, techniques to operate on it, and the map and reduce paradigm of Apache Spark, of which Databricks is the leading proponent. The market also includes streaming data technologies, where the store of data can in some sense be in the flow of data itself, known as “data in motion,” such as the Apache Kafka software promoted by Confluent.

MosaicML, which raised $64 million prior to the deal, appealed to businesses with language models that are less the generalists of the ChatGPT kind and more focused on domain-specific business use cases, what Rao called “building experts.”

The prevailing trend in artificial intelligence, including generative AI, has been to build programs that are more and more general, capable of handling tasks in all sorts of domains, from playing video games to engaging in chat to writing poems, captioning pictures, writing code, and even controlling a robotic arm stacking blocks.

The fervor over ChatGPT demonstrates how compelling such a broad program can be when it can be wielded to handle any number of requests.

And yet, the use of AI in the wild, by individuals and institutions, is likely to be dominated by far more focused approaches, because they can be far more efficient.

“I can build a smaller model for a particular domain that greatly outperforms a larger model,” Rao told ZDNET.

MosaicML had made a name for itself by demonstrating its prowess in the MLPerf benchmark tests, which show how fast a neural network can be trained. Among the secrets to speeding up AI is the observation that smaller neural networks, built with greater focus, can be more efficient.

That idea was explored extensively in a 2019 paper by MIT scientists Jonathan Frankle and Michael Carbin that won a best paper award that year at the International Conference on Learning Representations. The paper introduced the “lottery ticket hypothesis,” the notion that every big neural net contains “sub-networks” that can be just as accurate as the full network, but with less compute effort.
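To make the idea of a “sub-network” concrete, here is a minimal sketch of magnitude pruning with a reset to initial weights, the general kind of procedure associated with the lottery ticket work. The function names and the toy weight values are hypothetical, chosen only for illustration; this is not MosaicML’s or the paper’s actual code, and a real experiment would prune and retrain a full network over several rounds.

```python
def magnitude_mask(weights, keep_fraction):
    """Return a 0/1 mask that keeps the largest-magnitude weights."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    n_keep = max(1, round(len(weights) * keep_fraction))
    # Rank weight positions by descending absolute value.
    ranked = sorted(range(len(weights)), key=lambda i: -abs(weights[i]))
    keep = set(ranked[:n_keep])
    return [1 if i in keep else 0 for i in range(len(weights))]


def rewind_to_init(initial_weights, mask):
    """Reset surviving weights to their initial values; zero out the rest."""
    return [w0 if m else 0.0 for w0, m in zip(initial_weights, mask)]


# Hypothetical toy values, not taken from any real model.
initial = [0.5, -0.1, 0.3, 0.05, -0.8, 0.2]   # weights before training
trained = [0.9, -0.02, 0.4, 0.01, -1.2, 0.1]  # weights after training

mask = magnitude_mask(trained, keep_fraction=0.5)
subnetwork = rewind_to_init(initial, mask)
print(mask)        # [1, 0, 1, 0, 1, 0]
print(subnetwork)  # [0.5, 0.0, 0.3, 0.0, -0.8, 0.0]
```

Half of the weights are removed, and the surviving half is the candidate “winning ticket” that would then be retrained on its own.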

Frankle and Carbin have been advisors to MosaicML.

MosaicML also draws explicitly on techniques explored by Google’s DeepMind unit that show there’s an optimal balance between the amount of training data and the size of a neural network. By boosting the amount of training data by as much as double, it’s possible to make a smaller network much more accurate than a bigger one of the same kind.
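The trade-off between data and model size can be sketched with back-of-the-envelope arithmetic. The two constants below are assumptions, not figures from this article or from MosaicML: a commonly cited approximation that training costs about 6 floating-point operations per parameter per token, and a rule of thumb of roughly 20 training tokens per parameter that is often associated with DeepMind’s work on compute-optimal training. The function names are hypothetical.

```python
import math

FLOPS_PER_PARAM_TOKEN = 6  # assumed approximation: compute ~ 6 * params * tokens
TOKENS_PER_PARAM = 20      # assumed rule of thumb for a balanced run


def training_flops(n_params, n_tokens):
    """Approximate training compute for a given model and data size."""
    return FLOPS_PER_PARAM_TOKEN * n_params * n_tokens


def compute_optimal(flops_budget):
    """Split a compute budget into a balanced (parameters, tokens) pair."""
    n_params = math.sqrt(flops_budget / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


# Illustrative budget: a 70-billion-parameter model on 1.4 trillion tokens.
budget = training_flops(70e9, 1.4e12)
params, tokens = compute_optimal(budget)
print(f"{params:.3g} parameters, {tokens:.3g} tokens")

# Under this approximation, halving the model while doubling the data
# costs the same amount of compute.
same_cost = training_flops(35e9, 2.8e12)
```

Under these assumptions, a model half the size trained on twice the data fits the same compute budget, which is the kind of exchange the paragraph above describes.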

All of those efficiencies are encapsulated by Rao in what he calls a kind of Moore’s Law of the speed-up of networks. Moore’s Law is the semiconductor rule of thumb that posited, roughly, that the number of transistors in a chip would double every 18 months, for the same price. That is the economic miracle that made possible the PC revolution, followed by the smartphone revolution.

In Rao’s version, neural nets can become four times faster with every generation, just by applying the tricks of smart compute with the MosaicML Composer tool.
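Both rules of thumb are simple compounding, which a few lines of arithmetic make explicit. The function names are hypothetical, and because the article does not say how long a “generation” lasts, the second function counts generations rather than months.

```python
def moores_law_multiple(months, doubling_period_months=18):
    """Growth in transistor count after `months`, doubling every 18 months."""
    return 2 ** (months / doubling_period_months)


def generational_speedup(generations, factor_per_generation=4):
    """Cumulative training speed-up after a number of generations at 4x each."""
    return factor_per_generation ** generations


print(moores_law_multiple(36))   # 4.0 (two doublings in three years)
print(generational_speedup(3))   # 64 (three generations at 4x each)
```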

A number of surprising insights come from such an approach. One, contrary to the oft-repeated claim that machine learning forms of AI require massive amounts of data, it may be that smaller data sets can work well if applied in the optimal balance of data and model, à la DeepMind’s work. In other words, really big data may not be better data.

Unlike gigantic generic neural nets such as GPT-3, which is trained on everything on the Internet, smaller networks can be the repository of a company’s unique knowledge about its domain.

“Our infrastructure almost becomes the back-end for building these types of networks on people’s data,” explained Rao. “And there’s a whole reason why people need to build their own models.”

“If you’re Bank of America, or if you’re the intelligence community, you can’t use GPT-3 because it’s trained on Reddit, it’s trained on a bunch of stuff that might even have personally identifiable information, and it might have stuff that hasn’t been explicitly permitted to be used,” said Rao.

For that reason, MosaicML has been part of the push to make open-source versions of large language models available, so that customers know what kind of program is acting on their data. It’s a view shared by other leaders in generative AI, such as Stability.ai founder and CEO Emad Mostaque, who in May told ZDNET, “There’s no way you can use black-box models” for the world’s most valuable data, including corporate data.

MosaicML last Thursday released as open source its latest language model, called MPT-30B, which contains 30 billion parameters, or neural weights. The company claims MPT-30B surpasses the quality of OpenAI’s GPT-3. Since the company introduced its open-source language models in early May, they have been downloaded more than two million times, it said.

Although automatically discovering schema may prove fruitful for database innovation, it’s important to keep in mind that large language models still have issues such as hallucinations, where the program produces incorrect answers while insisting they are real.

“People don’t actually understand, when you ask something of ChatGPT, it’s not correct many times, and sometimes it sounds so correct, like a really good bullsh*t artist,” said Rao.

“Databases have an expectation of absolute correctness, of predictability,” based on “a lot of things that have been engineered over the last 30, 40 years in the database space that need to be true, or at least mostly true, for some kind of new way of doing it,” observed Rao.

“People look at it [large language models] like it can solve all the problems they’ve had,” said Rao of enterprise interest. “Let’s figure out the nuts and bolts of actually getting there.”
