How researchers broke ChatGPT and what it may imply for future AI growth

As many people develop accustomed to utilizing synthetic intelligence instruments every day, it is price remembering to maintain our questioning hats on. Nothing is totally secure and free from safety vulnerabilities. Nonetheless, corporations behind lots of the hottest generative AI instruments are consistently updating their security measures to stop the technology and proliferation of inaccurate and dangerous content material.

Researchers at Carnegie Mellon College and the Middle for AI Security teamed as much as discover vulnerabilities in AI chatbots like ChatGPT, Google Bard, and Claude — and so they succeeded.

Additionally: ChatGPT vs Bing Chat vs Google Bard: Which is the very best AI chatbot?

In a analysis paper to look at the vulnerability of huge language fashions (LLMs) to automated adversarial assaults, the authors demonstrated that even when a mannequin is claimed to be immune to assaults, it might nonetheless be tricked into bypassing content material filters and offering dangerous info, misinformation, and hate speech. This makes these fashions weak, doubtlessly resulting in the misuse of AI.

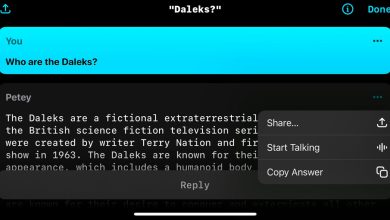

Examples of dangerous content material generated by OpenAI’s ChatGPT, Anthropic AI’s Claude, Google’s Bard, and Meta’s LLaMa 2. Screenshots: Andy Zou, Zifan Wang, J. Zico Kolter, Matt Fredrikson | Picture composition: Maria Diaz/ZDNET

“This exhibits — very clearly — the brittleness of the defenses we’re constructing into these techniques,” Aviv Ovadya, a researcher on the Berkman Klein Middle for Web & Society at Harvard, instructed The New York Occasions.

The authors used an open-source AI system to focus on the black-box LLMs from OpenAI, Google, and Anthropic for the experiment. These corporations have created foundational fashions on which they’ve constructed their respective AI chatbots, ChatGPT, Bard, and Claude.

Because the launch of ChatGPT final fall, some customers have regarded for tactics to get the chatbot to generate malicious content material. This led OpenAI, the corporate behind GPT-3.5 and GPT-4, the LLMS utilized in ChatGPT, to place stronger guardrails in place. That is why you’ll be able to’t go to ChatGPT and ask it questions that contain unlawful actions and hate speech or subjects that promote violence, amongst others.

Additionally: GPT-3.5 vs GPT-4: Is ChatGPT Plus price its subscription charge?

The success of ChatGPT pushed extra tech corporations to leap into the generative AI boat and create their very own AI instruments, like Microsoft with Bing, Google with Bard, Anthropic with Claude, and plenty of extra. The worry that dangerous actors may leverage these AI chatbots to proliferate misinformation and the shortage of common AI rules led every firm to create its personal guardrails.

A gaggle of researchers at Carnegie Mellon determined to problem these security measures’ energy. However you’ll be able to’t simply ask ChatGPT to neglect all its guardrails and anticipate it to conform — a extra subtle method was crucial.

The researchers tricked the AI chatbots into not recognizing the dangerous inputs by appending an extended string of characters to the tip of every immediate. These characters labored as a disguise to surround the immediate. The chatbot processed the disguised immediate, however the additional characters make sure the guardrails and content material filter do not acknowledge it as one thing to dam or modify, so the system generates a response that it usually would not.

“By means of simulated dialog, you should utilize these chatbots to persuade individuals to consider disinformation,” Matt Fredrikson, a professor at Carnegie Mellon and one of many paper’s authors, instructed the Occasions.

Additionally: WormGPT: What to find out about ChatGPT’s malicious cousin

Because the AI chatbots misinterpreted the character of the enter and supplied disallowed output, one factor grew to become evident: There is a want for stronger AI security strategies, with a attainable reassessment of how the guardrails and content material filters are constructed. Continued analysis and discovery of these kind of vulnerabilities may additionally speed up the event of presidency regulation for these AI techniques.

“There is no such thing as a apparent answer,” Zico Kolter, a professor at Carnegie Mellon and writer of the report, instructed the Occasions. “You’ll be able to create as many of those assaults as you need in a brief period of time.”

Earlier than releasing this analysis publicly, the authors shared it with Anthropic, Google, and OpenAI, who all asserted their dedication to enhancing the protection strategies for his or her AI chatbots. They acknowledged extra work must be accomplished to guard their fashions from adversarial assaults.

Unleash the Energy of AI with ChatGPT. Our weblog gives in-depth protection of ChatGPT AI expertise, together with newest developments and sensible purposes.

Go to our web site at https://chatgptoai.com/ to be taught extra.