Nvidia boosts its ‘superchip’ Grace-Hopper with quicker reminiscence for AI

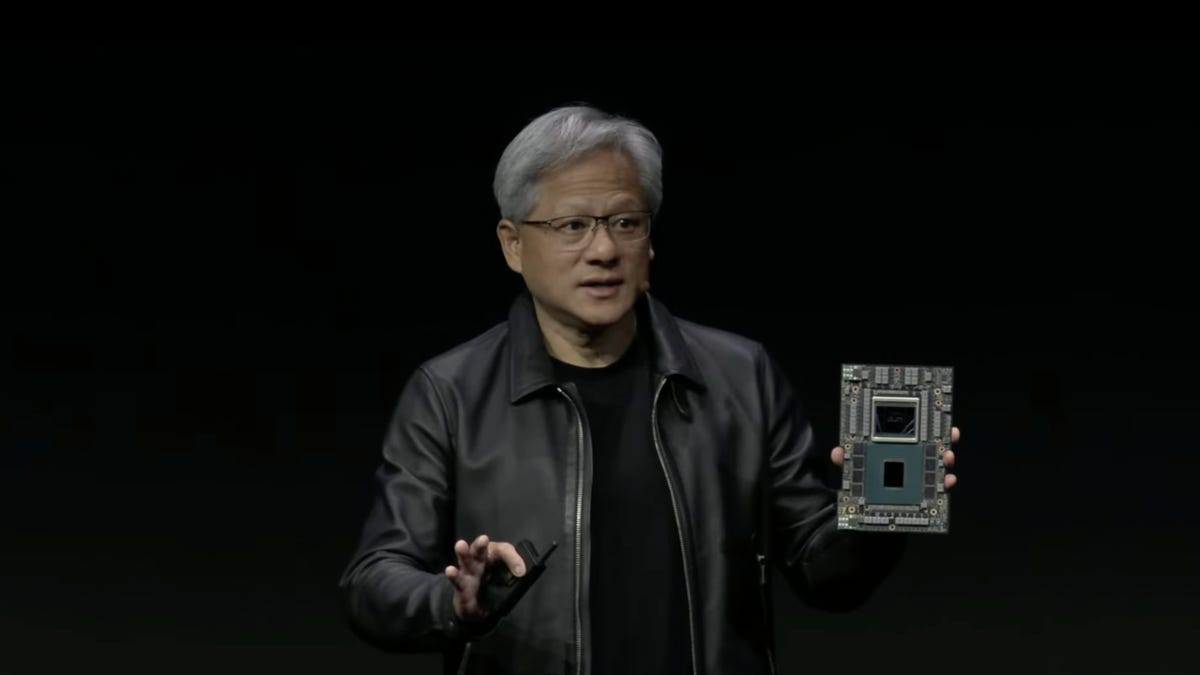

Nvidia CEO Jensen Huang on Tuesday confirmed off his firm’s subsequent iteration of the mix CPU and GPU, the “GH200” Grace Hopper “superchip.” The half boosts the reminiscence capability to five terabytes per second to deal with growing dimension of AI fashions. Nvidia

Nvidia plans to ship subsequent yr an enhanced model of what it calls a “superchip” that mixes CPU and GPU, with quicker reminiscence, to maneuver extra information into and out of the chip’s circuitry. Nvidia CEO Jensen Huang made the announcement Tuesday throughout his keynote handle on the SIGGRAPH laptop graphics present in Los Angeles.

The GH200 chip is the subsequent model of the Grace Hopper combo chip, introduced earlier this yr, which is already transport in its preliminary model in computer systems from Dell and others.

Additionally: Nvidia unveils new type of Ethernet for AI, Grace Hopper ‘Superchip’ in full manufacturing

Whereas the preliminary Grace Hopper comprises 96 gigabytes of HBM reminiscence to feed the Hopper GPU, the brand new model comprises 140 gigabytes of HBM3e, the subsequent model of the high-bandwidth-memory customary. HBM3e boosts the information fee feeding the GPU to five terabytes (trillion bytes) per second from 4 terabytes within the authentic Grace Hopper.

The GH200 will observe by one yr the unique Grace Hopper, which Huang mentioned in Could was in full manufacturing.

“The chips are in manufacturing, we’ll pattern it on the finish of the yr, or so, and be in manufacturing by the tip of second-quarter [2024],” he mentioned Tuesday.

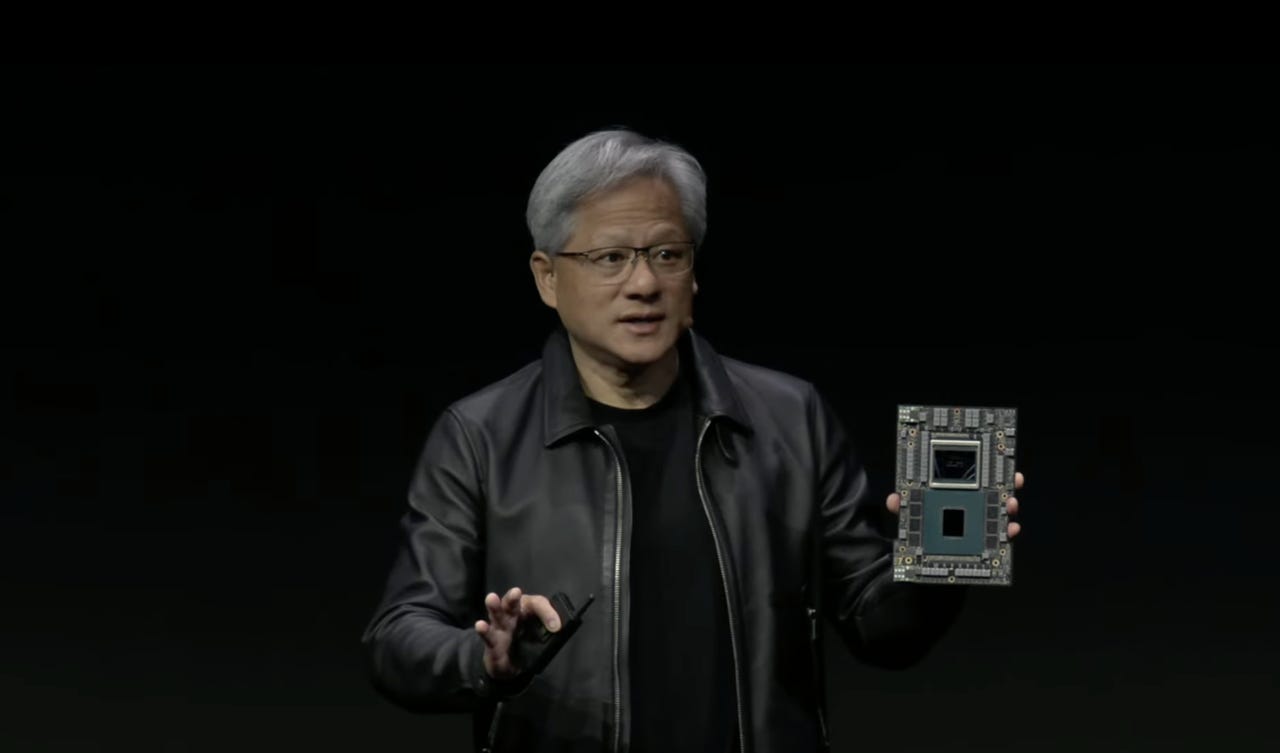

The GH200, like the unique, options 72 ARM-based CPU cores within the Grace chip, and 144 GPU cores within the Hopper GPU. The 2 chips are linked throughout the circuit board by a high-speed, cache-coherent reminiscence interface, NVLink, which permits the Hopper GPU to entry the CPU’s DRAM reminiscence

Huang described how the GH200 could be linked to a second GH200 in a dual-configuration server, for a complete of 10 terabytes of HBM3e reminiscence bandwidth.

GH200 is the subsequent model of the Grace Hopper superchip, which is designed to share the work of AI applications through a good coupling of CPU and GPU. Nvidia

Upgrading the reminiscence pace of GPU elements is pretty customary for Nvidia. For instance, the prior era of GPU — A100 “Ampere” — moved from HBM2 to HBM2e.

HBM started to exchange the prior GPU reminiscence customary, GDDR, in 2015, pushed by the elevated reminiscence calls for of 4K shows for online game graphics. HBM is a “stacked” reminiscence configuration, with the person reminiscence die stacked vertically on prime of each other, and linked to one another by the use of a “through-silicon through” that runs by means of every chip to a “micro-bump” soldered onto the floor between every chip.

AI applications, particularly the generative AI kind corresponding to ChatGPT, are very memory-intensive. They have to retailer an unlimited variety of neural weights, or parameters, the matrices which might be the principle practical models of a neural community. These weights improve with every new model of a generative AI program corresponding to a big language mannequin, and they’re trending towards a trillion parameters.

Additionally: Nvidia sweeps AI benchmarks, however Intel brings significant competitors

Additionally throughout the present, Nvidia introduced a number of different merchandise and partnerships.

AI Workbench is a program operating on a neighborhood workstation that makes it simple to add neural web fashions for the cloud in containerized trend. AI Workbench is at the moment signing up customers for early entry.

New workstation configurations for generative AI, from Dell, HP, Lenovo, and others, underneath the “RTX’ model, will mix as many as 4 of the corporate’s “RTX 6000 Ada GPUs,” every of which has 48 gigabytes of reminiscence. Every desktop workstation can present as much as 5,828 trillion floating level operations per second (TFLOPs) of AI efficiency and 192 gigabytes of GPU reminiscence, mentioned Nvidia.

You’ll be able to watch the replay of Huang’s full keynote on the Nvidia web site.

Unleash the Energy of AI with ChatGPT. Our weblog gives in-depth protection of ChatGPT AI expertise, together with newest developments and sensible purposes.

Go to our web site at https://chatgptoai.com/ to study extra.