Hewlett Packard Enterprise has always taken a decidedly infrastructure-centric approach to delivering flexible, consumption-based compute, storage, and networking to enterprise IT. HPE provides that flexibility through its popular HPE GreenLake offerings.

HPE shook things up at its annual HPE Discover event in Las Vegas last week. The company didn't just expand its definition of GreenLake; it brought a supercomputer to the party. It also launched the first of what HPE says will be many domain-specific AI applications delivered as-a-service. This move elevates HPE above its OEM peers that deliver only traditional infrastructure solutions, placing the company directly in the path of enterprise digital transformation.

Generative AI in the Enterprise

We have yet to determine where the flurry of activity around generative AI is ultimately leading us. Nonetheless, the technology is already changing how businesses across industries think about customer engagement, decision-making, marketing, workflow automation, and a dozen other tasks. Innovation around Large Language Models (LLMs), such as the models behind ChatGPT, is just getting started. The list of use cases grows by the day.

The challenge for IT organizations embracing LLMs is two-fold. First, the IT infrastructure required to support LLM training is complex and expensive. Second, existing LLMs are trained on a broad corpus of data, which can make it difficult to customize the technology for a particular application.

Solving the second problem, customizing the data set, often leads straight to the first: building and managing a complex AI infrastructure. Both challenges must be addressed, because for an LLM to be fully relevant to an organization, it must be trained on organization-specific data.

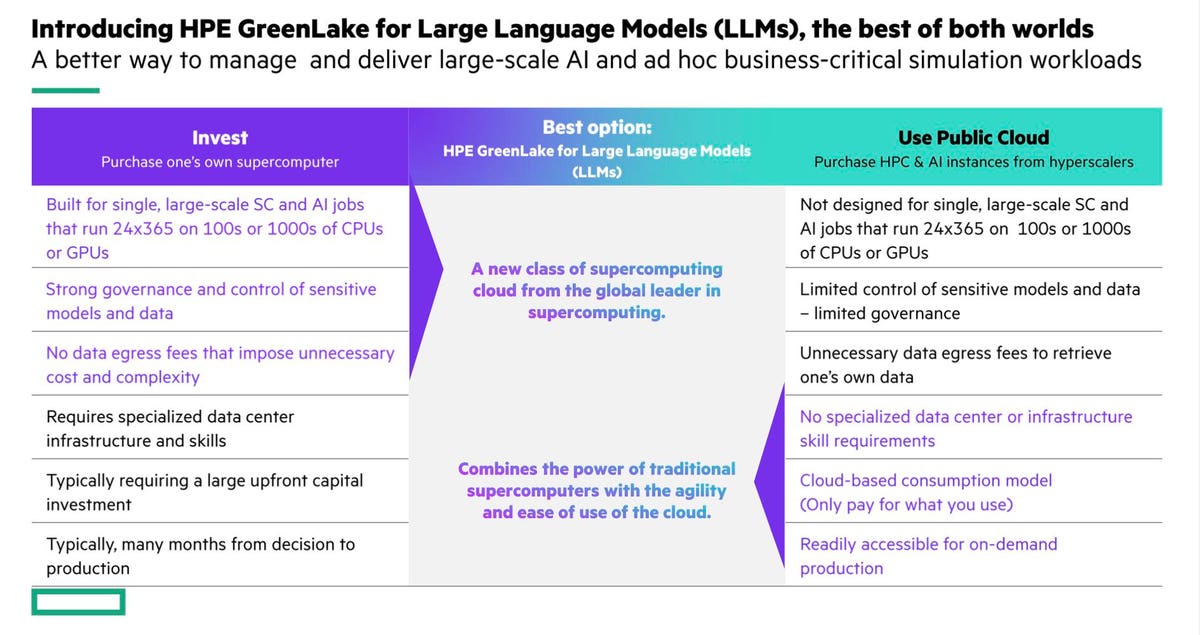

A growing number of cloud services offer ChatGPT-as-a-Service, such as Microsoft's Azure OpenAI Service and even ChatGPT's originator, OpenAI. Plenty of cloud providers are also willing to rent out the infrastructure required to train and operate a large language model; just pick your favorite cloud service provider (CSP). What was missing from the mix was an enterprise-class, consumption-based offering for training and running LLMs. That is, until last week, when HPE launched its new HPE GreenLake for Large Language Models.

HPE GreenLake for Large Language Models

HPE GreenLake for LLMs gives users direct access to a pre-configured LLM stack running on a multi-tenant Cray XD supercomputer. This allows enterprises to train, tune, and deploy large-scale AI privately without worrying about the underlying platform. The infrastructure also scales up to thousands of CPUs and GPUs to match customers' needs. It's nearly supercomputer-as-a-service.

HPE GreenLake for LLMs

The Aleph Alpha Luminous Model

HPE worked with Aleph Alpha, a German AI company, to provide users with a pre-trained LLM. Aleph Alpha's Luminous model lets organizations leverage their own data by training and fine-tuning a customized model, giving the LLM the benefit of the enterprise's knowledge. The Luminous model is available in multiple languages, including English, French, German, Italian, and Spanish.

Based on a Cray XD Supercomputer

For as long as most IT practitioners can remember, the highest-performing computers in the world have come from Cray, which became part of HPE in 2019. The latest-generation Cray XD-series supercomputers deliver not just an unprecedented amount of raw compute capability; they are also designed for scalability. The Cray XD I/O subsystem moves data between nodes and storage devices at a rate that keeps the GPUs doing LLM training from stalling. It is nearly impossible to deliver this level of performance in a traditional enterprise data center.

HPE GreenLake for LLMs is based on an unspecified Cray XD model powered by a pair of latest-generation AMD EPYC processors. The solution also includes eight NVIDIA H100 Tensor Core GPUs and uses Cray's high-performance, low-latency interconnect between nodes.

The NVIDIA H100 is the best accelerator available today for LLM training. In the batch of MLPerf Training v3.0 results released yesterday, the NVIDIA H100 set per-accelerator performance records, including on the new GPT-3-based large language model benchmark. You don't need to know what the underlying hardware is when buying LLMs as a service, but it's good to know that HPE GreenLake is building its service on industry-best technology.

Fully Tuned AI Software Stack

The software story for LLMs is no less complex than the hardware, requiring multiple layers of special-purpose frameworks, tools, and libraries to be expertly configured. It's a lot to take on. Fortunately, HPE does the heavy lifting for you. HPE GreenLake for LLMs includes today's most popular AI tools and frameworks along with the necessary NVIDIA software stack, all tied together with HPE's own HPE Machine Learning Development Environment, HPE Machine Learning Data Management Software, and HPE Ezmeral Data Fabric.

HPE GreenLake for LLMs

Analyst's Take

HPE's story about GreenLake is that it offers both traditional private-cloud and hybrid-cloud capabilities, supplemented with what HPE terms "Flex Solutions" that target horizontal and vertical workloads. The new GreenLake for LLMs extends that into targeted AI solutions.

The company promises that GreenLake for LLMs won't be its last workload-as-a-service play, teasing upcoming AI-as-a-Service offerings for climate modeling, drug discovery, financial services, and whatever else its customer base might ask for. This expands HPE GreenLake from its infrastructure-focused beginnings into a range of services that begin to look very cloud-like.

HPE GreenLake Portfolio

I like where HPE is taking GreenLake. The company's strategy is sound, and the need for the solution is real. Leveraging its Cray assets for demanding AI workloads is simply smart. HPE is, however, forcing us to think about the company in a different light. Is it a traditional infrastructure company with a consumption-based offering? Or is HPE a new kind of cloud provider? The lines are rapidly blurring.

No other technology company is offering anything akin to what HPE is delivering with its expanded GreenLake solutions. HPE provides the flexible, consumption-based infrastructure model promised by the cloud, delivered on-prem or in a colocation facility. If you have AI or HPC workloads that require infrastructure more complex than you want to buy and maintain, HPE will deliver it as GreenLake AI-as-a-Service. The new GreenLake for LLMs offering is just the first. I'm excited to see what's next.

Disclosure: Steve McDowell is an industry analyst, and NAND Research is an industry analyst firm, that engages in, or has engaged in, research, analysis, and advisory services with many technology companies, which may include those mentioned in this article. Mr. McDowell does not hold any equity positions with any company mentioned in this article.
